Continuous Integration: What, Why, How?

Continuous Integration: two everyday words that, when combined, describe a powerful tool for keeping changes small and limiting their effect on a software system. In this post, I'll retrofit CI to an existing open-source project, tailored to its requirements, to give the project's lifecycle greater assurance and reliability.

![](https://cdn.hashnode.com/res/hashnode/image/upload/v1621357354820/t5l9ZvB-V.jpeg?auto=compress,format&format=webp)

What is it?

At the heart of continuous integration lies the idea that the more closely we monitor how changes combine at the smallest scale, the more in tune we are with problems. As we've all come to experience, linearly managed software projects can be a nightmare when a significant issue surfaces late in the lifecycle, such as two big, core features being merged and creating all kinds of conflicts and chaos. Shudder. So, we use CI tools to define repeatable, automated processes for the tasks a software system and its tooling require, such as building and testing it on every change.

What's its value?

As we know, CI tools take our definitions and procedures in the form of an editable configuration and reliably automate their execution, minimising as many variables as possible. Overall, this lets us limit incompatibilities, conflicts, bugs, and general pain-in-the-arse problems, now and in the future, by defining a suite of requirements and verifying the outcome of each task.

![](https://cdn.hashnode.com/res/hashnode/image/upload/v1621361939269/eNaNaCfpj.png?auto=compress,format&format=webp)

What options exist?

Having used similar CI tooling in my professional work, I thought it about time I began tinkering with it on GitHub projects. After all, gaining wider experience of other CI tools and integration methods, across different toolsets and languages, is no bad thing! There's Jenkins, GitLabCI, GoCD, TeamCity, plus task runners like Gulp and Grunt that often get wired into a pipeline; the list goes on. Really, the tool you pick often reflects the environment and languages you're working with, but services like TravisCI and GitLabCI integrate CI with your version control as a hosted service. Neat.

I started small by adding it to an existing .NET WinForms project, MedClerk. Being a project of little significance beyond the academic, it was a great candidate for a CI pipeline, as it has both build and test stages, neither of which is particularly complicated. So, as with any problem, let's break it down into its component parts.

Pick your poison

Firstly, as I didn't plan to roll my own continuous integration tool (plenty of clever folks have already tackled that problem), my decision came down to "Which free service provides a great functional experience and best serves my minor needs?". As it turned out, TravisCI won this round thanks to its popularity, its wide range of supported platforms and language ecosystems, and its painless integration with GitHub. First things first: sign up to TravisCI and activate the repositories it may access. Check.

Prepare yourself

You might be thinking "This all sounds rather complicated, and we're only now getting to configuration? Oh...". Alas, fear not! Like most CI systems, TravisCI uses a key-value syntax to define its operations; in this particular case, it's YAML. Because this configuration lives in the repository, the CI process is versioned alongside the project, so it stays in sync with the code and under our control.

Let's get our ducks in a row: documentation. We're going to need docs to understand the configuration file schema, available packages, and any special cases. Thankfully, TravisCI has fairly pleasing documentation aimed at getting you the quickest standard working solution for your environment. After a glance through TravisCI's core concepts and beginner's tutorial over a coffee, I was ready to tackle the .NET documentation.

Configuration

Here comes the fun. Since we can view the output of our build tasks on TravisCI, let's make this easy on ourselves and gradually build the script up.

So, create, commit, and push the file TravisCI expects, named .travis.yml. As one might expect from an empty file, Travis went about his day without regard for my project. So let's give him something to be interested in and add some basic environment information: which language environment we need, and what he'll be building.

```yaml
language: csharp
solution: MedClerk.sln
```

Knowing Travis will handle all generic environment configuration (e.g. installing ssh and git to allow cloning the project), we still need to tell him which runtime to build with. In this case, we have two choices: .NET Core or the mono framework. In fact, we could tell him to use both and run two separate build jobs to ensure we're compatible with each:

```yaml
language: csharp
solution: MedClerk.sln
matrix:
  include:
    - dotnet: 2.1.502
      mono: none
    - mono: latest
```

This is a bit beyond my needs at the moment, but it's one to think about! It would produce two build jobs with dedicated environments, one per runtime, so they can't interfere with one another, the project, or the build tasks. So let's keep it simple and use mono, but also install the .NET Core SDK for later. So far, our config looks like this:

```yaml
language: csharp
mono: latest
dotnet: 2.1.502 # a recent stable suggested for optimal travis happiness
solution: MedClerk.sln
```

Now that Travis knows what he's doing, let's tell him a few things we need and how to get them:

```yaml
language: csharp
mono: latest
dotnet: 2.1.502
solution: MedClerk.sln

install:
  - nuget restore MedClerk.sln
  - nuget install NUnit.Console -Version 3.9.0 -OutputDirectory testrunner
```

Since this project uses NUnit3 via NuGet, we'll restore the packages for all projects under the solution and ensure the NUnit runner is available. Having done that, it's now a case of telling Travis what we want him to work his magic on - the build and tests.

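```yaml
language: csharp
mono: latest
dotnet: 2.1.502
solution: MedClerk.sln

install:
  # Restore NuGet packages for every project in the solution
  - nuget restore MedClerk.sln
  # Fetch the NUnit console runner into ./testrunner
  - nuget install NUnit.Console -Version 3.9.0 -OutputDirectory testrunner

script:
  # Build the Release configuration of the whole solution
  - msbuild /p:Configuration=Release MedClerk.sln
  # Run the Release test assembly through the NUnit console runner under mono
  - mono ./testrunner/NUnit.ConsoleRunner.3.9.0/tools/nunit3-console.exe ./MedClerk.Test/bin/Release/MedClerk.Test.dll
```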

And there we go, la fin (or so I thought). Here, we're telling Travis that the script phase consists of a build step using the msbuild command-line tooling (the beating heart of Visual Studio builds). In the real world, we'd want to build and test the version of the software the end user will interact with, so we pass /p:Configuration=Release to build the Release configuration. The second half of the script tells Travis to run the NUnit console runner we installed earlier, via mono, and hands it the Release build of our test assembly (.dll).
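One thing worth noticing in this configuration is that the NUnit runner version (3.9.0) is written twice: once in the `nuget install` step and again in the runner's path. A minimal sketch of one way to keep the two in step, assuming a hypothetical `NUNIT_VERSION` variable defined under Travis's `env` / `global` setting (it isn't part of the MedClerk config), might look like this:

```yaml
language: csharp
mono: latest
dotnet: 2.1.502
solution: MedClerk.sln

env:
  global:
    # Hypothetical variable: pin the NUnit console runner version in one place
    - NUNIT_VERSION=3.9.0

install:
  - nuget restore MedClerk.sln
  - nuget install NUnit.Console -Version $NUNIT_VERSION -OutputDirectory testrunner

script:
  - msbuild /p:Configuration=Release MedClerk.sln
  # The runner's folder name embeds its version, so reuse the same variable here
  - mono ./testrunner/NUnit.ConsoleRunner.$NUNIT_VERSION/tools/nunit3-console.exe ./MedClerk.Test/bin/Release/MedClerk.Test.dll
```

Global environment variables are exported to every build phase, so both commands expand the same value. This only covers the Travis config, though; versions pinned in a project's packages.config or a .csproj `HintPath` still have to be kept in sync by hand.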

If you're an experienced TravisCI-er in the .NET world, you are likely screaming at your screen that problems lie ahead.

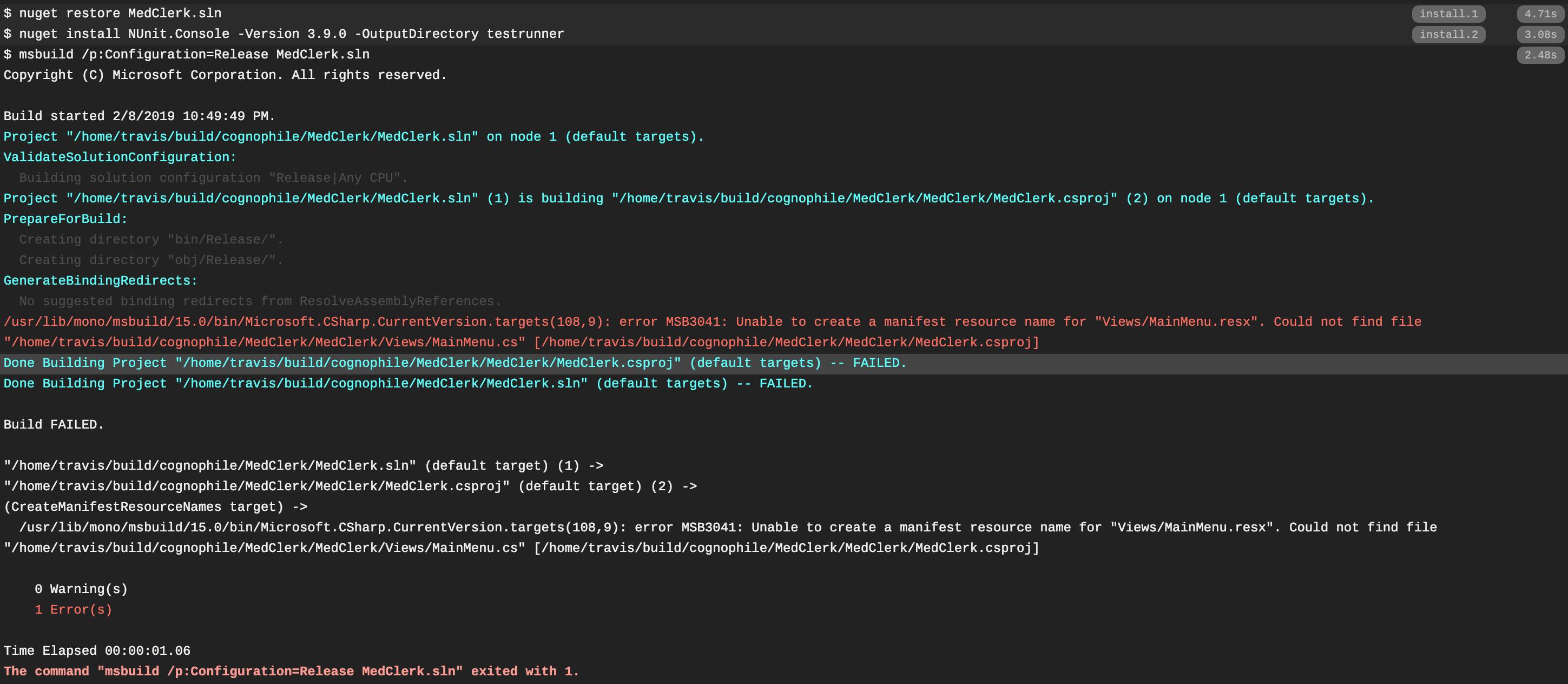

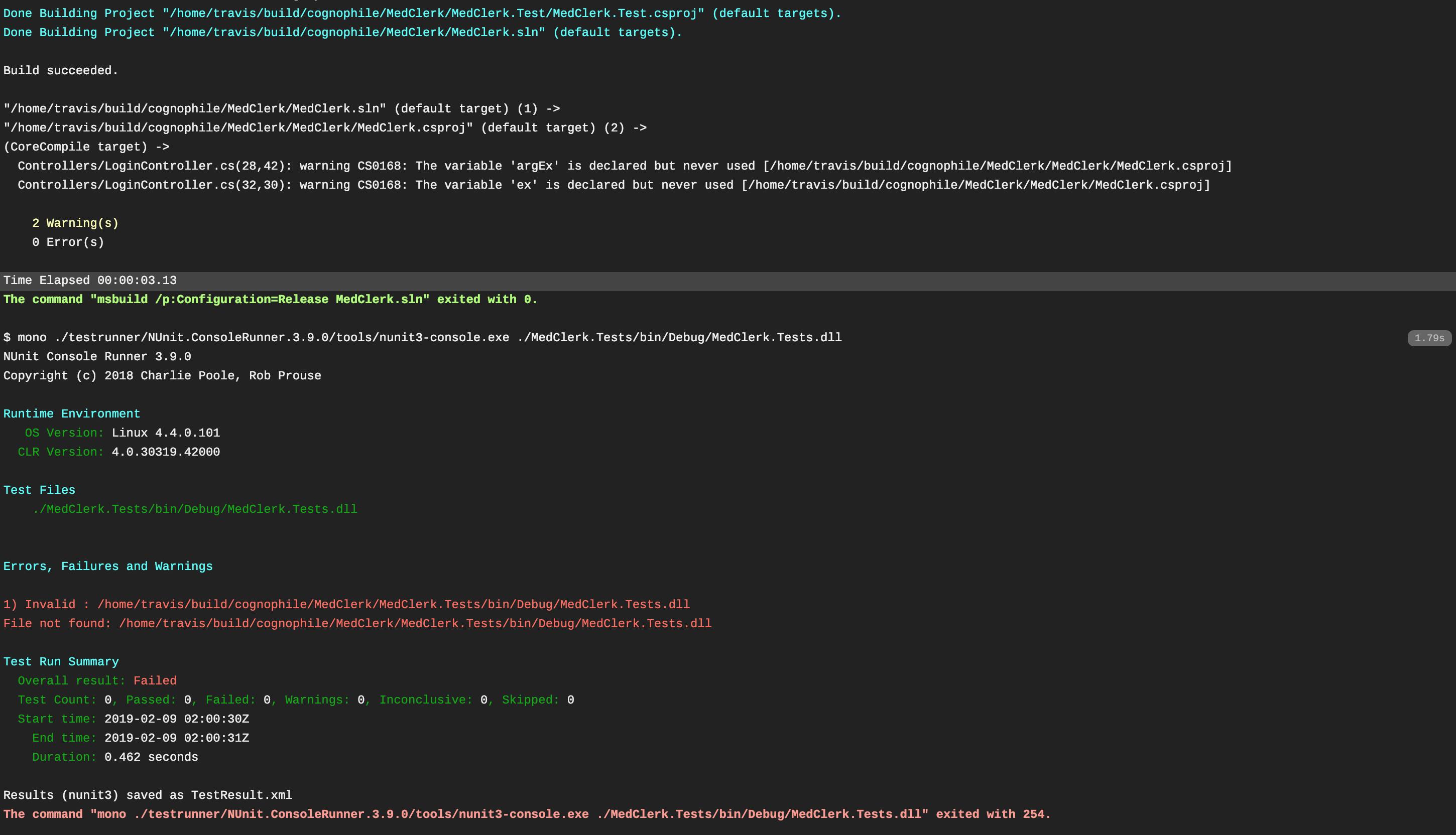

Execution

Safe to say I didn't expect things to run smoothly with this exploration, and sure enough, they didn't. I ran into a few problems over the course of the night, primarily relating to the project configuration.

Firstly, the build was failing due to an obscure file-naming problem: one of the forms and its associated classes appeared to follow the same naming scheme (Views/MainMenu.*), so the solution build would try to locate Views/MainMenu.*, but only Views/mainMenu.* existed. Windows and macOS file systems are case-insensitive by default, so the mismatch went unnoticed locally, whereas the Linux build environment on Travis is case-sensitive. Having spent a few minutes searching the solution's configuration files, I decided to move on, as a simple enough fix existed thanks to Visual Studio: a quick refactor of the filename updated all references [cac44083@GitHub], including that pesky hidden one (wherever it was).

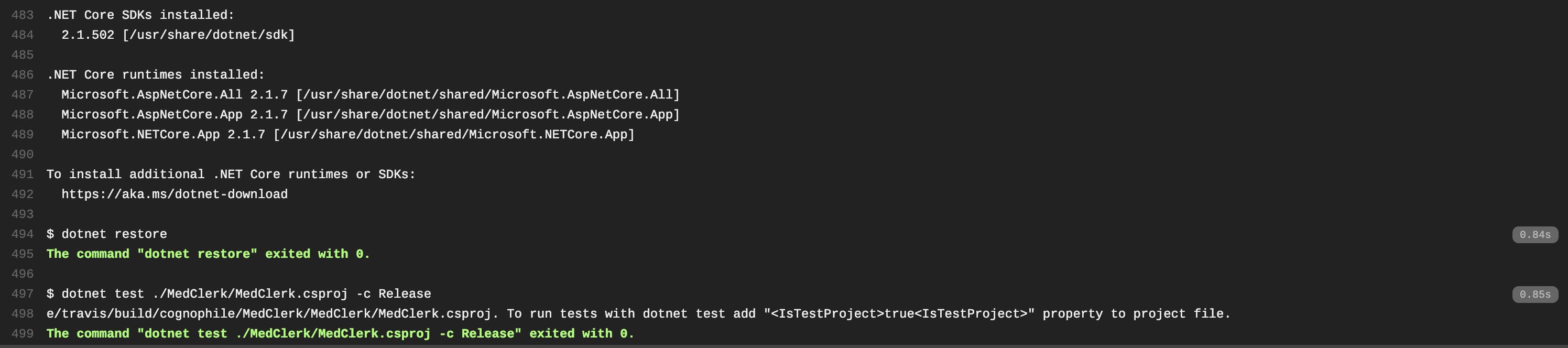

Later, there was a little confusion over which runtime was required to run NUnit and msbuild. Eventually, I found that, the way I'd written the configuration, mono was required for test execution and dotnet for building. I'd attempted a few builds using purely mono or purely dotnet, but neither fancied working. Looking back, now refreshed and fuelled by coffee, I'd imagine the dotnet test failures stemmed from the tests originally being included in the application project rather than a dedicated test project (we'll get to that).

To meet automated testing best practices for .NET projects, I decided to relocate the tests from the production project into their own dedicated project under the solution. "Why?", you might ask. Well, as Jason Jackson kindly explained, it's mostly to avoid bloating the release candidate with unnecessary assembly references, and generally to respect separation of concerns by not shipping tests with production code and executables.

So, I set out to refactor the tests into their own project, which wasn't all that painful. It meant adding a new NUnit project to the solution; for the uninitiated, Grant Winney wrote a great step-by-step guide for Visual Studio on macOS. Once this was done, I moved the tests previously located in MedClerk/Tests/ into it and, sure enough, they required a few assembly references (System, System.Data, System.Data.DataSetExtensions, System.Transactions, and System.Xml).

Adding NUnit was as simple as right-clicking Packages/ and clicking "Restore" to install the NUnit assemblies that Visual Studio's project creation process had already kindly linked to the project. A smooth process. A few issues came up regarding the NUnit version, which I fixed by ensuring the new MedClerk.Test/packages.config had the required package definitions and target framework information:

```xml
<?xml version="1.0" encoding="utf-8"?>
<packages>
  <package id="NUnit" version="3.9.0" targetFramework="net461" />
  <package id="NUnit3TestAdapter" version="3.9.0" targetFramework="net461" />
</packages>
```

Oh, and I forgot to update the NUnit version reference in the test project's MedClerk.Test.csproj, which caused a momentary headache later on before I tracked it down. You can view all these changes in a0210888. Note that the HintPath below points at NUnit 2.6.4, which doesn't match the 3.9.0 package definition above; a little edit to MedClerk.Test.csproj in 2b7a694 fixed that easily. Here's the offender...

```xml
<Reference Include="nunit.framework">
  <HintPath>..\packages\NUnit.2.6.4\lib\nunit.framework.dll</HintPath>
</Reference>
```

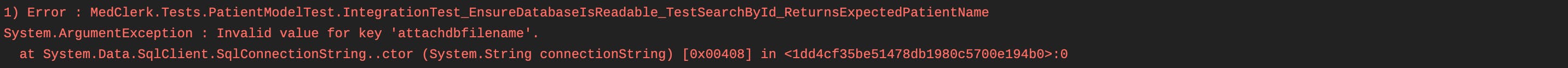

"Finally..." I thought, "Everything is in place for the build and it works, and now everything is set for the tests to work. Awesome!". Nope. As it turns out, Travis isn't a big fan of local database files such as MedClerk.mdf. Though the unit tests were running, the integration tests would fail. My hypothesis is that, since this file is unusable in Visual Studio on macOS, the same likely applies to the Travis environment (Ubuntu 14.04): .mdf files are SQL Server data files, typically attached through SQL Server Express LocalDB, which only runs on Windows. Sure enough, executing the tests on Windows and then macOS confirmed this by passing and failing, respectively.

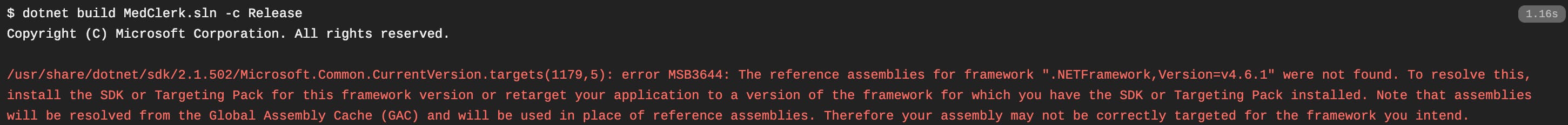

Seeing as some tests were running, it clearly wasn't the build process causing the problem, but potentially the project configurations. So, I tried running the test stage with dotnet again, since a dedicated test project was now available to target. With some tinkering of .travis.yml, I converted all tasks to use dotnet and adjusted the test project's .csproj to mark it as a test project under a Release build using <IsTestProject>true</IsTestProject>. This revealed, as I suspected, that the build would fail because it was running on .NET Core in Travis while both project configurations specified .NET Framework 4.6.1 as the target framework.

As it turned out, building a .NET 4.6.1 WinForms application isn't straightforward with .NET Core, as it would spit out the following during the build.

It'd be nice to be rid of mono in all this, as its installation accounts for a significant portion of the 10-minute build on Travis, but alas, here is where my journey came to a frustrating end. In March 2018, @akoeplinger commented on a similar issue that .NET Framework projects weren't buildable with dotnet yet. Bugger.

With one final check of docs.microsoft, I attempted to specify the target framework in the build and test stages, as shown below. 10 minutes later... no-ball.

```yaml
language: csharp
mono: latest
dotnet: 2.1.502
solution: MedClerk.sln

install:
  - nuget restore MedClerk.sln

script:
  - dotnet restore MedClerk.sln
  - dotnet build MedClerk.sln -c Release -f net461
  - dotnet test ./MedClerk.Test/MedClerk.Test.csproj -c Release -f net461
```

In the end, I reverted to the working TravisCI configuration, which passed because the database integration tests were marked as skipped to let the build succeed. This will be documented to remind developers to re-enable these tests once a new, non-.mdf database is in place. This way, the log shows the state of affairs with a passing build and (mostly) passing tests. Maybe I'll look into refactoring the application to use another database source, one friendlier to mono and Travis, such as an actual SQL Server instance.
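As a swapped-in alternative to marking the database tests as skipped in code, they could be filtered out at the runner instead. A minimal sketch, assuming the integration tests are tagged with NUnit's `[Category("Integration")]` attribute (the category name is hypothetical; the post doesn't say how the tests are grouped), passes a `--where` expression to nunit3-console so Travis runs everything except that category:

```yaml
language: csharp
mono: latest
dotnet: 2.1.502
solution: MedClerk.sln

install:
  - nuget restore MedClerk.sln
  - nuget install NUnit.Console -Version 3.9.0 -OutputDirectory testrunner

script:
  - msbuild /p:Configuration=Release MedClerk.sln
  # Exclude tests tagged [Category("Integration")] (hypothetical category name),
  # since they depend on the MedClerk.mdf file that can't be used on Travis
  - mono ./testrunner/NUnit.ConsoleRunner.3.9.0/tools/nunit3-console.exe ./MedClerk.Test/bin/Release/MedClerk.Test.dll --where "cat != Integration"
```

The trade-off: skipping in code keeps the tests visible as skipped in every run, whereas a filter keeps the decision in the CI config and leaves the tests runnable as normal on Windows.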

Wrapping up this palava

I'm happy with my experience of TravisCI on this project so far. My experience with it is limited, but I've used competing tools more in my professional work. I feel it's hard (and likely unfair) to compare them apples-to-apples, as the configuration used at work is internally hosted and managed by integrating with our site-managed version control infrastructure, whereas in this scenario I was a consumer of the TravisCI service via GitHub.

But, I do think Travis did a good job of abstracting much of the responsibility away from the idiot end-user (hi there), without taking away customisability. Plus, its documentation is compact, easy to read, and gives you the fundamental knowledge (and code) to get something working. Whether it's comprehensive or custom is another matter for the implementer.

Lessons on reflection? I could have put a little more time into understanding the nuances of the available build environments; this can be achieved by not working on such things at 2 am on a Saturday morning out of a stubborn refusal to stop without some form of success. But, "Nothing good ever happens past 2 am" - I should have heeded Ted Mosby's advice off the bat. On the bright side, this has opened a new opportunity for another project: investigating whether a .NET 4.6.1 WinForms project can be converted to .NET Core. Another project, another time.